Action plans based on strategic objectives and maturity in terms of data governance competence

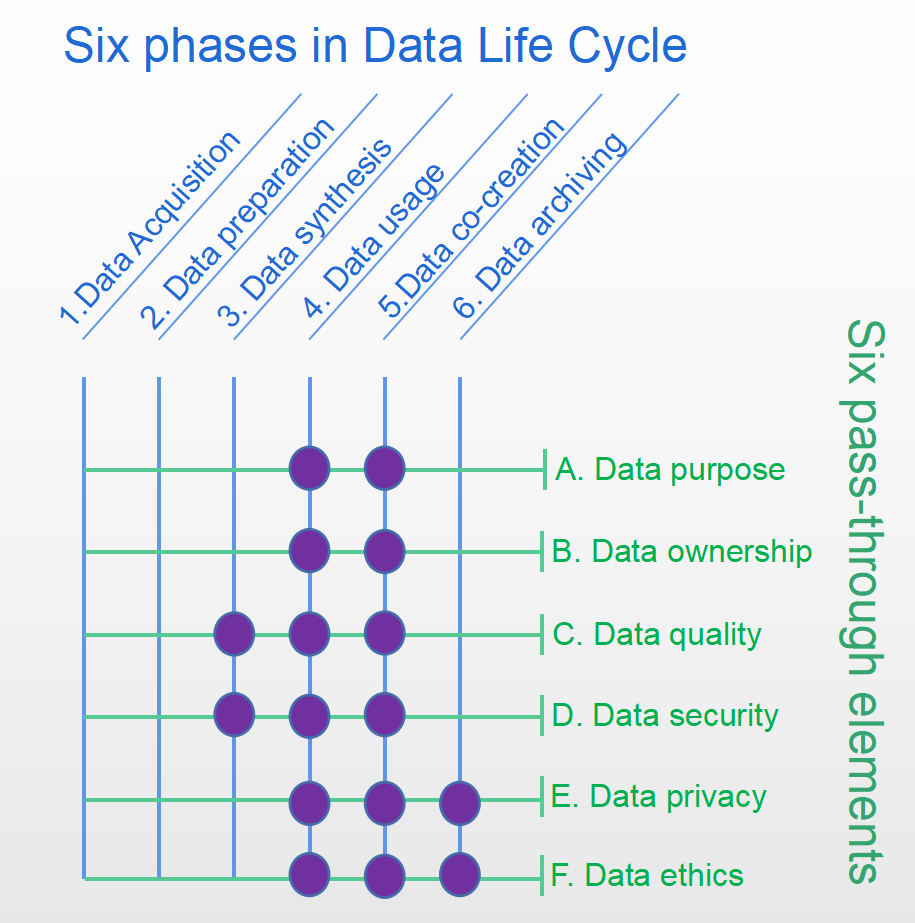

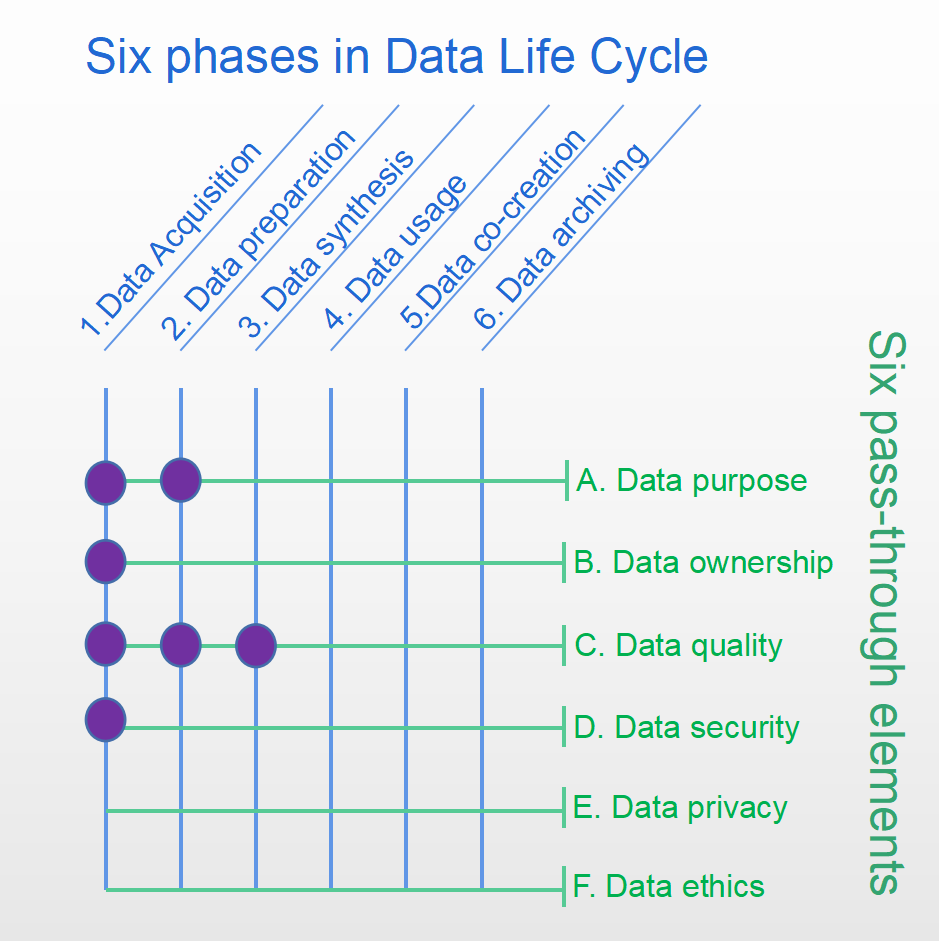

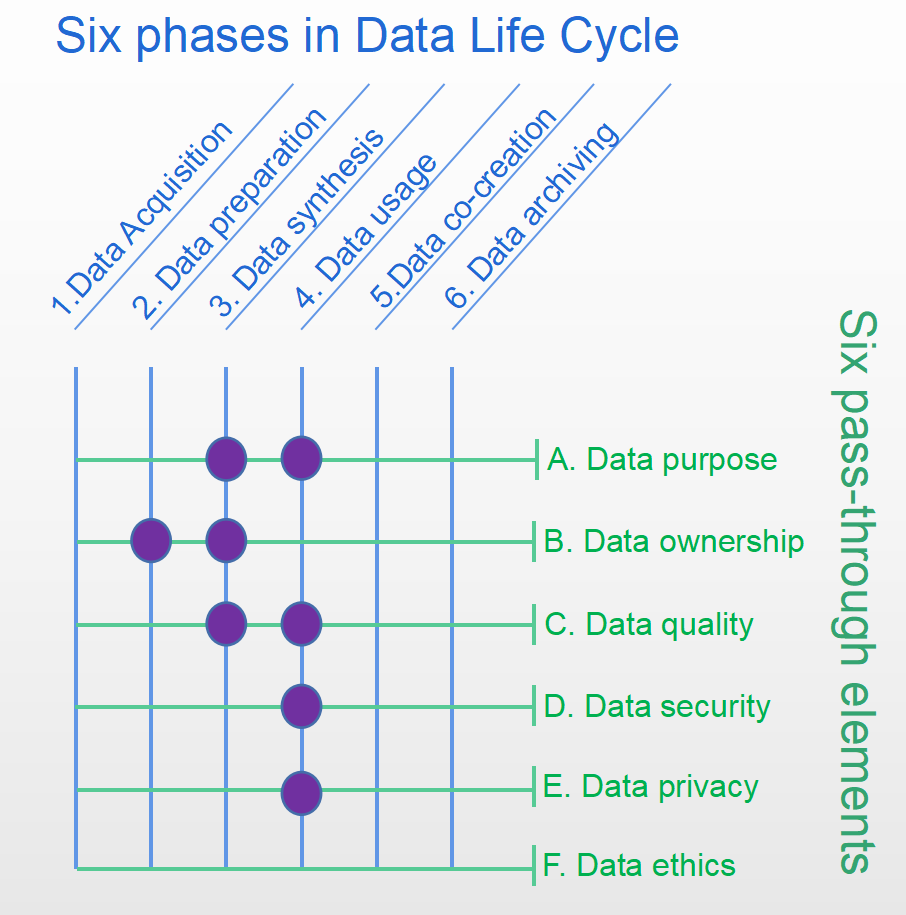

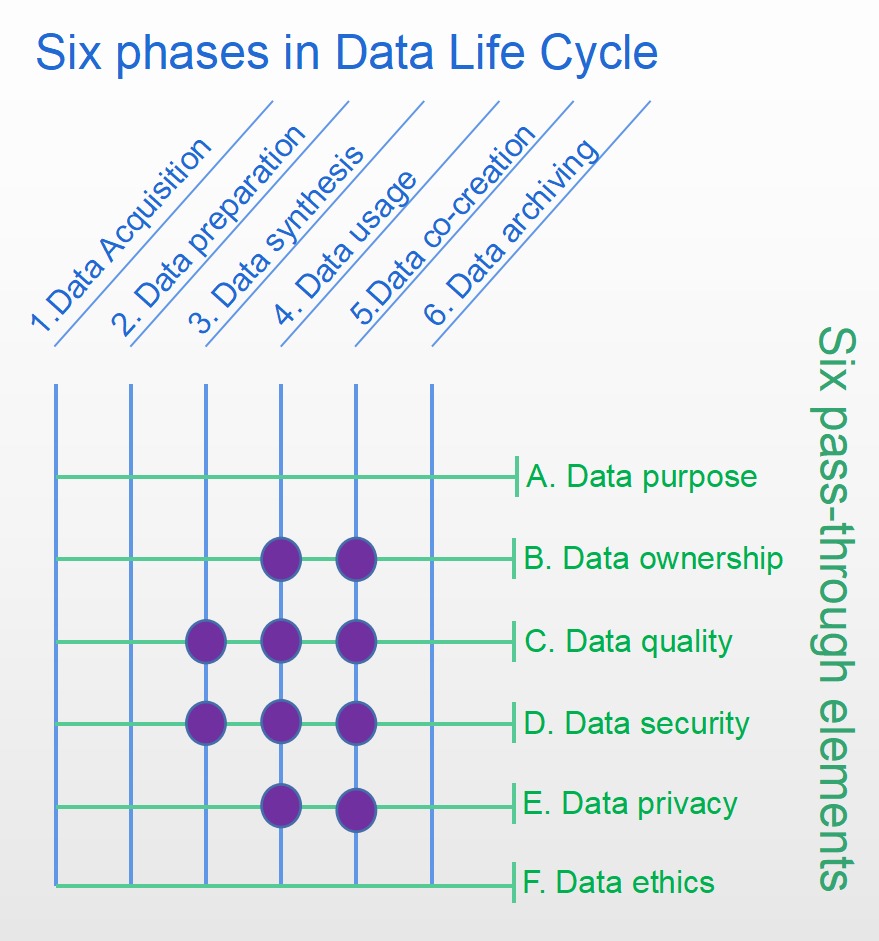

Effective data governance must evolve from a few business units to the whole corporation. It therefore necessarily involves the “6 x 6 matrix” mentioned in Chapter 5, whose many aspects come into play differently depending on the stage of digital transformation.

Corporates should formulate their detailed action plans based on their strategic objectives, business size, maturity in terms of data governance competence, current technical capabilities, talent pool and so on. Below I describe some common pathways for enterprises and institutions.

Stage 1 – Build a foundation for data governance

Dare to “defy gravity”

During this stage, many of the barriers to transformation come from people. Even when management is determined to pursue digital reform, it can be hard to get started. One of the main obstacles is participants’ fear of losing turf or power.

To address this, we first need a clear enterprise data strategy. More importantly, we need a clear description of the data lifecycle concept within the enterprise, so that participants understand the long-term benefits of “nourishing” data resources. At this stage, however, the data lifecycle and data governance practices are not yet clearly defined.

In bringing data governance back to order from the disorganised state of the past, there is a definite need to establish a strong organisation within the enterprise to coordinate and drive things forward.

From my consulting work over the past few years, I have also learned that digital transformation can lead to deep-rooted changes in management within an organisation.

I often compare digital transformation to a “defy-gravity” process as people are long used to working in a non-data-driven environment. Based on my experience, I would recommend that corporates prioritise the following items during the initiation period:

● Promote standardisation of data calibre (the definitions and calculation rules behind each metric) to ensure the consistency, interpretability and usability of data metrics.

It is relatively common for multiple versions of the same metric to appear within an organisation. If you don’t even have standardised and accurate data on which to base your business analysis, what’s the point of talking about digital transformation and business intelligence?

I have seen executives demoted because of analytical errors caused by inaccurate data metrics, only to find out afterwards that the problem was in the data calibre.

This shows that inconsistent data metrics can cause enormous problems. To establish standard data metrics and build a unified data service, the business, R&D and data teams must jointly design and build a comprehensive data metrics system.
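A metrics system can surface calibre conflicts before they mislead anyone. The minimal Python sketch below assumes each team registers its metric definitions as records. It flags any metric name that has more than one distinct calculation rule. The `MetricDefinition` fields and the sample metrics are hypothetical illustrations, not a prescribed schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """One team's definition (its "data calibre") of a business metric."""
    name: str            # metric name, e.g. "active_users" (hypothetical)
    owner: str           # team publishing this definition
    formula: str         # calculation rule, kept as text for comparison
    time_window: str     # e.g. "daily" or "monthly"
    filters: tuple = ()  # e.g. ("exclude_test_accounts",)


def find_conflicts(definitions):
    """Return metric names that carry more than one distinct calculation rule."""
    rules_by_name = {}
    for d in definitions:
        rule = (d.formula, d.time_window, tuple(sorted(d.filters)))
        # Group owners by (name, rule) so conflicts show who uses which rule.
        rules_by_name.setdefault(d.name, {}).setdefault(rule, []).append(d.owner)
    return {name: rules for name, rules in rules_by_name.items() if len(rules) > 1}


definitions = [
    MetricDefinition("active_users", "marketing", "count distinct user_id", "daily",
                     ("exclude_test_accounts",)),
    MetricDefinition("active_users", "finance", "count distinct user_id", "monthly"),
    MetricDefinition("gmv", "finance", "sum order_amount", "daily"),
]
conflicts = find_conflicts(definitions)
print(sorted(conflicts))  # ['active_users']
```

In this sketch, comparing formulas as plain text only catches conflicts that are written differently. A real metrics system would also need business review to spot rules that are worded differently but equivalent.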

As the starting point of the data lifecycle and the earliest entry point for data, data collection and dispatching should be normalised or standardised as early as possible. Bear in mind that normalising data collection takes time, for example to change people’s mindsets and to overcome governance difficulties caused by legacy issues.

When I was working at Alibaba, there were three software development kits (SDKs) for collecting mobile app data within the group, maintained by three separate teams. The teams were at odds, and the integration took a year and a half to complete. Getting this part right saves a great deal of potential data governance cost.
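Normalising collection largely means mapping every SDK’s output onto one agreed event schema. The Python sketch below shows this under assumed conditions. The SDK names, field mappings and standard field list are all hypothetical, and real SDKs would also differ in units, encodings and semantics.

```python
# Hypothetical field mappings: each legacy SDK names the same fields differently.
SDK_FIELD_MAPS = {
    "sdk_a": {"uid": "user_id", "evt": "event_name", "ts": "timestamp_ms"},
    "sdk_b": {"userId": "user_id", "eventType": "event_name", "time": "timestamp_ms"},
}
STANDARD_FIELDS = ("user_id", "event_name", "timestamp_ms")


def normalise_event(source, raw):
    """Map a raw event from the given SDK onto the single standard schema."""
    mapping = SDK_FIELD_MAPS[source]
    # Keep only fields the mapping knows about, renamed to standard names;
    # unmapped fields are dropped silently in this sketch.
    event = {mapping[key]: value for key, value in raw.items() if key in mapping}
    missing = [f for f in STANDARD_FIELDS if f not in event]
    if missing:
        raise ValueError(f"{source} event is missing {missing}")
    event["source_sdk"] = source  # keep provenance for later governance
    return event


print(normalise_event("sdk_b", {"userId": "u42", "eventType": "click", "time": 1700000000000}))
```

Rejecting incomplete events at the point of entry, rather than repairing them downstream, is the design choice that saves the later governance cost described above.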

● Data security is different from network security, and internal security risks are a key concern. In the early days of transformation, the security of internal data access is often overlooked, including the setting of internal data permissions. I have seen dozens of engineers across a department share the same “universal” password, with authorisation left in place despite personnel changes. Once, when auditing API rights management in a group, I found that no single system could accurately identify the data content and users of the thousands of APIs already in use. Some of the APIs were even open to the public without approval.

● The security issues involved in compliance risk must not be underestimated. In many large conglomerates, external data was introduced case by case in the early days. From a cost-control perspective, the introduction of external data should be centrally managed. However, as internal and external data integration grows more complex and compliance risks increase, the security of data access is no longer simply a compliance issue: it may even expose the company to legal risk.
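The API audit described above can start as a simple rule check over an inventory of APIs. The Python sketch below assumes such an inventory exists, along with a list of active staff and known shared accounts. The `ApiRecord` fields and the rules are hypothetical choices that mirror the problems listed: unidentified content or owners, unapproved public exposure, stale grants and shared “universal” accounts.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ApiRecord:
    path: str
    owner: Optional[str]       # None: nobody can say who owns it
    data_class: Optional[str]  # None: data content not identified
    public: bool
    approved: bool
    grantees: set = field(default_factory=set)  # accounts holding access


def audit_apis(apis, active_staff, shared_accounts):
    """Return (api path, finding) pairs for each rule an API violates."""
    findings = []
    for api in apis:
        if api.owner is None or api.data_class is None:
            findings.append((api.path, "owner or data content unidentified"))
        if api.public and not api.approved:
            findings.append((api.path, "open to the public without approval"))
        # Grants held by people who are neither active staff nor known shared accounts.
        stale = api.grantees - active_staff - shared_accounts
        if stale:
            findings.append((api.path, f"access kept by departed staff: {sorted(stale)}"))
        shared = api.grantees & shared_accounts
        if shared:
            findings.append((api.path, f"shared 'universal' accounts: {sorted(shared)}"))
    return findings


apis = [
    ApiRecord("/orders", "finance", "confidential", False, True, {"alice", "team_admin"}),
    ApiRecord("/users/export", None, None, True, False, {"bob"}),
]
for finding in audit_apis(apis, active_staff={"alice"}, shared_accounts={"team_admin"}):
    print(finding)
```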

A data resource directory is a core element of good data governance and is strategically essential for managing data assets and improving the quality and productivity of data analysis. A resource directory can start with data suppliers and then be reconciled from a business perspective. Because much Big Data originates outside the enterprise, an external data directory and its scalability should be considered at the same time. Each data source in the enterprise, and its usage, should be connected to the data directory in real time for monitoring and review.
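Here is a minimal Python sketch of such a directory. It assumes every data access can be routed through a `record_access` hook. Entries are keyed by supplier and business domain, external sources are flagged, and unregistered sources are rejected. All class, field and dataset names are hypothetical. A production directory would usually feed on query logs rather than explicit calls.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DirectoryEntry:
    dataset: str
    supplier: str         # internal team or external vendor
    business_domain: str  # business view used to reconcile entries
    external: bool
    consumers: set = field(default_factory=set)
    last_access: Optional[datetime] = None


class DataDirectory:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        if entry.dataset in self._entries:
            raise ValueError(f"{entry.dataset} is already registered")
        self._entries[entry.dataset] = entry

    def record_access(self, dataset, consumer):
        """Called on every read, so usage stays visible for monitoring and review."""
        entry = self._entries.get(dataset)
        if entry is None:
            raise KeyError(f"unregistered data source accessed: {dataset}")
        entry.consumers.add(consumer)
        entry.last_access = datetime.now(timezone.utc)

    def by_domain(self, domain):
        return sorted(e.dataset for e in self._entries.values() if e.business_domain == domain)

    def external_sources(self):
        return sorted(e.dataset for e in self._entries.values() if e.external)

    def unused(self):
        return sorted(e.dataset for e in self._entries.values() if not e.consumers)


directory = DataDirectory()
directory.register(DirectoryEntry("orders", "trade_team", "sales", False))
directory.register(DirectoryEntry("credit_bureau_scores", "vendor_x", "risk", True))
directory.record_access("orders", "bi_dashboard")
print(directory.external_sources(), directory.unused())
```

Raising an error for unregistered sources reflects the point above that every data source should be connected to the directory. Under this design, anything outside the directory is treated as a governance gap rather than silently allowed.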

Stage 2 – Value-creation potential in data governance

Gradual implementation of the data governance around the data lifecycle

Building on Stage 1, enterprises should focus on strengthening their technical capabilities and refining their data governance standards, specifications and systems. This should be complemented by a horizontal steering organisation backed by top management, so that governance can be implemented gradually around the data lifecycle.

Generalisation of data governance

- Construction of standard data metrics: To establish a unified data metrics system and build integrated data services, the business, R&D and data teams must be given incentives to work together on a comprehensive data metrics system.

The process of building a complete, accurate and efficient metrics system relies on the team’s true understanding of the business, which can be a very long process. Taobao, for example, took years of learning before it finally came up with over 600 metrics to fully describe its day-to-day operations – a process of “setting things right”. The establishment of the metrics system needs to be verified in the course of business operations, and its level of refinement will gradually improve as the data analysis skills of business personnel strengthen.

- Change management: In the Big Data era, data sources can change frequently because of the massive, multi-source and heterogeneous nature of the data itself. Each part of the data lifecycle (six phases x six elements) should be strengthened so that corporates understand the various kinds of data source change.

Identify and categorise the types of data source change and their potential impact, and notify users as early as possible so that they can develop targeted response strategies. In addition to changes in the content of data sources, watch for changes in compliance rules.
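Here is one way to categorise changes and notify affected users. The Python sketch below compares two schema versions, tags each difference as breaking or non-breaking, and routes notices to the users of the affected columns. It covers schema changes only, not compliance-rule changes. Treating added columns as non-breaking is an assumption, and the column-to-user mapping is hypothetical; in practice it would come from lineage metadata.

```python
BREAKING, NON_BREAKING = "breaking", "non-breaking"


def classify_schema_change(old, new):
    """Compare two schemas (column -> type) and categorise each change."""
    changes = []
    for col in old.keys() - new.keys():
        changes.append((col, "removed", BREAKING))
    for col in new.keys() - old.keys():
        changes.append((col, "added", NON_BREAKING))  # assumption: additive is safe
    for col in old.keys() & new.keys():
        if old[col] != new[col]:
            changes.append((col, f"type {old[col]} -> {new[col]}", BREAKING))
    return sorted(changes)


def notifications(changes, column_users):
    """Map each downstream user to the changes affecting columns they consume."""
    notices = {}
    for col, change, severity in changes:
        for user in sorted(column_users.get(col, ())):
            notices.setdefault(user, []).append(f"[{severity}] {col}: {change}")
    return notices


old = {"user_id": "string", "amount": "int", "channel": "string"}
new = {"user_id": "string", "amount": "decimal", "region": "string"}
changes = classify_schema_change(old, new)
print(notifications(changes, {"amount": {"finance"}, "channel": {"marketing", "finance"}}))
```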

- Security management: Uneven levels of data security create potential risks and can lead to security breaches. It is therefore necessary to establish standards and baselines for the centralised management of data and information security in the company. The specific content of the data security management baseline should be developed with the management requirements of each phase of the data lifecycle in mind, so as to arrive at a unified specification.

- Governance guidelines: To give employees a clearer understanding of how to implement a strategy that prioritises data assets and security, corporates need a range of data governance guidelines, for example on user privacy protection, data security classification management and data sharing policy.
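A security baseline that combines classification levels with lifecycle phases can be expressed as data and checked automatically. The Python sketch below is illustrative only. The four classification levels, the phase names and the controls are hypothetical and are not the six phases of the Chapter 5 matrix. Each control applies from a minimum classification level upwards.

```python
from enum import IntEnum


class Level(IntEnum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


# Hypothetical baseline: phase -> list of (minimum level, required control).
BASELINE = {
    "collection": [(Level.CONFIDENTIAL, "consent_record")],
    "storage": [(Level.CONFIDENTIAL, "encryption_at_rest"),
                (Level.RESTRICTED, "quarterly_access_review")],
    "sharing": [(Level.INTERNAL, "approval"), (Level.CONFIDENTIAL, "masking")],
}


def required_controls(phase, level):
    """All controls whose threshold is at or below the data's classification."""
    return {control for threshold, control in BASELINE.get(phase, []) if level >= threshold}


def gaps(phase, level, implemented):
    """Controls the baseline requires but the team has not implemented."""
    return sorted(required_controls(phase, level) - set(implemented))


print(gaps("sharing", Level.CONFIDENTIAL, {"approval"}))
```

Keeping the baseline as data, separate from the check, means one unified specification can be reviewed and versioned centrally while each department runs the same check.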

Enhancing core technical competencies

Reduce costs and increase efficiency: move from isolated data technology work to orderly, centralised management. The first step must be an audit of cluster resources. All application jobs in the cluster are counted by the department they belong to, and the computational and storage resources they consume are audited. Costs are then allocated according to the sharing strategy. The aim is to improve the efficiency and quality of data development, increase model usage, reduce duplicated development work and storage, and lower overall cost.
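The audit-then-allocate step can be sketched in a few lines of Python. The example below assumes job records that carry a department, CPU hours and storage in GB. It also assumes a proportional-to-usage sharing strategy, which is one possible strategy rather than the only one. The field names and figures are hypothetical.

```python
from collections import defaultdict


def allocate_cost(jobs, compute_cost, storage_cost):
    """Split shared cluster costs across departments in proportion to usage."""
    cpu = defaultdict(float)
    storage = defaultdict(float)
    for job in jobs:  # audit: total each department's consumption
        cpu[job["department"]] += job["cpu_hours"]
        storage[job["department"]] += job["storage_gb"]
    total_cpu = sum(cpu.values())
    total_storage = sum(storage.values())
    bill = {}
    for dept in cpu.keys() | storage.keys():
        share = 0.0
        if total_cpu:
            share += compute_cost * cpu[dept] / total_cpu
        if total_storage:
            share += storage_cost * storage[dept] / total_storage
        bill[dept] = round(share, 2)
    return bill


jobs = [
    {"department": "marketing", "cpu_hours": 30, "storage_gb": 100},
    {"department": "risk", "cpu_hours": 10, "storage_gb": 300},
    {"department": "marketing", "cpu_hours": 20, "storage_gb": 0},
]
print(allocate_cost(jobs, compute_cost=1200, storage_cost=400))
```

With these numbers, marketing uses 50 of 60 CPU hours and 100 of 400 GB, so it is billed 1000 + 100 = 1100. Risk is billed 200 + 300 = 500.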

Recognising the core data content through a data inventory review will help quickly enhance data development, modelling, operations and service capabilities, reducing duplicated investment in digitalisation by individual business units. This does not mean that departments should not have their own data technology capabilities. Rather, once the core (generic) components that need strengthening have been identified, we gain a better understanding of what the non-generic components are and what individual departments need to do.
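One cheap first pass in an inventory review is to look for tables in different departments with identical schemas, which are candidates for duplicated work. The Python sketch below fingerprints each schema and groups matching fingerprints. The inventory layout and table names are hypothetical. An identical schema is only a heuristic: confirming a real duplicate would also mean comparing content and lineage.

```python
import hashlib
from collections import defaultdict


def schema_fingerprint(columns):
    """Hash a schema (column -> type) independent of column order and case."""
    canonical = ",".join(sorted(f"{name.lower()}:{dtype.lower()}" for name, dtype in columns.items()))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def duplicate_candidates(inventory):
    """inventory: (department, table) -> schema. Returns cross-department matches."""
    groups = defaultdict(list)
    for key, columns in inventory.items():
        groups[schema_fingerprint(columns)].append(key)
    # Only groups spanning more than one department indicate duplicated investment.
    return [sorted(keys) for keys in groups.values() if len({dept for dept, _ in keys}) > 1]


inventory = {
    ("marketing", "user_profile"): {"user_id": "string", "city": "string", "age": "int"},
    ("risk", "customer_base"): {"AGE": "INT", "user_id": "string", "city": "string"},
    ("finance", "orders"): {"order_id": "string", "amount": "decimal"},
}
print(duplicate_candidates(inventory))
```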

Identifying the core technology to be built and shared collaboratively is a process of self-discovery. In the beginning, it is advisable to start with businesses that have a high impact and a large amount of data, and to monitor them first so that problems can be detected at the earliest opportunity. Do not underestimate the difficulty of identifying a common core technology platform; in many businesses, this process of ‘separating the wheat from the chaff’ can take years.

In fact, a ‘turf protection’ mentality is normal in large corporations, but the technical barriers it creates can fragment core resources.

Don’t think that duplication is no big deal; it is the root cause of data silos. When I was at Alibaba, several departments were racing to become the group’s core data platform, so they all invested heavily in building one, only to find that the core functions of their platforms were largely the same. The sooner management recognises such a situation, the more it can reduce the redundant cost of platform construction.

Once the decision has been made to build the core technology platform, the transition from old to new is another crucial challenge. It involves sorting through the organisation’s existing technology platforms, listing and scheduling the migration tasks, and carefully assessing the overall short-term impact without hurting team morale. During the transition, resources will be even tighter, but this is also an ideal opportunity to standardise data capabilities, and it should not be missed.
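Scheduling migration tasks is essentially a dependency-ordering problem. The Python sketch below uses the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) to group tasks into waves, where tasks in the same wave do not depend on each other. The task names and dependencies are hypothetical examples, not a recommended migration plan.

```python
from graphlib import TopologicalSorter

# Hypothetical migration tasks mapped to the tasks they depend on.
tasks = {
    "migrate_event_collection": set(),
    "migrate_user_tables": {"migrate_event_collection"},
    "migrate_order_tables": {"migrate_event_collection"},
    "rebuild_metrics": {"migrate_user_tables", "migrate_order_tables"},
    "retire_old_platform": {"rebuild_metrics"},
}


def migration_waves(deps):
    """Group tasks into waves; tasks within a wave can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(migration_waves(tasks), start=1):
    print(number, wave)
```

Listing the waves up front also makes the short-term impact easier to assess, because each wave shows which teams are affected at the same time.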

Most enterprises have more than one data lifecycle. For example, Alibaba’s Taobao and Alipay each have their own data lifecycle, and they also exchange data within the group. This raises problems such as complex decision processes for data sharing and under-utilised resources.

As you can imagine, managing multiple data lifecycles is not a simple task in itself. There is plenty of room for optimisation, both to ensure the security of sensitive data in circulation and to improve the automation of each part of the lifecycle.
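Sensitive data that circulates between lifecycles can be protected by field-level rules applied before sharing. The Python sketch below assumes a shared classification that says whether each sensitive field should be masked, hashed or dropped. The field names and rules are hypothetical. Salted hashing here is pseudonymisation, not anonymisation, so the salt must be protected like a key.

```python
import hashlib

# Hypothetical field rules agreed between the two lifecycles.
SENSITIVE = {"phone": "mask", "id_number": "hash", "name": "drop"}


def prepare_for_sharing(record, salt):
    """Apply field-level protection before a record leaves its own lifecycle."""
    shared = {}
    for key, value in record.items():
        rule = SENSITIVE.get(key)
        if rule is None:
            shared[key] = value  # non-sensitive fields pass through unchanged
        elif rule == "mask":
            text = str(value)
            visible = text[-4:] if len(text) > 4 else ""  # fully mask short values
            shared[key] = "*" * (len(text) - len(visible)) + visible
        elif rule == "hash":
            # A salted hash keeps the field joinable without exposing the raw value.
            shared[key] = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
        # rule == "drop": the field is omitted entirely
    return shared


print(prepare_for_sharing({"user_id": 7, "phone": "13800001234", "name": "Li", "id_number": "X1"}, "s3cret"))
```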

Stage 3 – Data governance at scale, the emergence of the Data Facilitation Platform (DFP)

Data governance aims to optimise the production and processing of data. Metadata plays an important role in governing data at scale within a data lifecycle by providing information about the data being governed. It is the best way to verify that the data lifecycle is working well, and it avoids mere talk about a data governance framework without adequate measurement. Effective metadata management is one of a data steward’s essential activities in a data governance practice. It enables data management policies, keeps data management focused on the production and usage of data, and eventually turns data into an enterprise-wide data asset.

Metadata is also known as “data about data”. Properly managed, metadata makes data more precise, stable and traceable during production. Metadata records all data elements of the data production process (similar to a supply chain), including scheduling, quality assurance, security monitoring and data “umbilical lineage” throughout the lifecycle. As a result, data is monitored throughout its lifecycle, its costs are managed or apportioned, its value is tracked, and it is traceable from production to service.
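The lineage part of metadata is what makes data traceable in both directions: upstream to its sources and downstream to everything it feeds. The Python sketch below keeps only lineage edges between hypothetical dataset names. It supports two queries, tracing a dataset back to its sources and finding the impact of a change. Scheduling, quality, security and cost metadata are left out for brevity.

```python
from collections import defaultdict, deque


class Lineage:
    """Directed lineage graph: an edge source -> target means target is built from source."""

    def __init__(self):
        self.downstream = defaultdict(set)
        self.upstream = defaultdict(set)

    def add_edge(self, source, target):
        self.downstream[source].add(target)
        self.upstream[target].add(source)

    @staticmethod
    def _walk(start, graph):
        """Breadth-first search returning every node reachable from start."""
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in graph.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def trace_to_sources(self, dataset):
        return self._walk(dataset, self.upstream)

    def impact_of(self, dataset):
        return self._walk(dataset, self.downstream)


lineage = Lineage()
for src, dst in [("raw_events", "clean_events"), ("clean_events", "daily_active_users"),
                 ("daily_active_users", "dashboard"), ("orders", "gmv"), ("gmv", "dashboard")]:
    lineage.add_edge(src, dst)
print(sorted(lineage.impact_of("clean_events")))
```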

Equipping data governance with a Data Facilitation Platform (DFP) is the starting point for full-scale digital transformation. It is also essential to understand the importance of data governance, which can save corporates up to 70% of their data production and processing workload. The reason is simple: the DFP was established to make the data lifecycle operate more effectively according to data governance principles, serving data analytics, machine learning, data services and data products. We will continue our discussion of the DFP in the next chapter.

Three major traps that must be overcome before digital transformation goes full scale

Digital transformation has always been a challenge and is the core business strategy of many corporates. In today’s business environment, the ability to extend the data governance framework of the data lifecycle from individual business units to the whole corporation has become a key factor in the success of digital transformation and a major determinant of corporates’ future competitiveness. However, several pitfalls in digital transformation can lead managers astray in data consolidation, governance and framework formation.

Trap one: Lack of a long-term vision of being data-driven

Planning for the future is one of the most challenging decisions for managers, especially in uncertain times like today. Even though the economy has started to recover, there are still too many variables to keep the situation under control. Even a plan for next month could be disrupted at any time, let alone a long-term plan spanning three or even five years. As well as preparing contingency solutions, corporates should look within for the answer.

Amazon.com founder Jeff Bezos was once asked at Amazon’s annual shareholder meeting how to develop a long-term plan. His answer was simple: identify what will not, or should not, change in the business, and use that to guide the company’s long-term strategy.

Bezos believes that Amazon is firmly committed to many things in its long-term vision. The most important of all is to always put the customer first, rather than the competition. Technology, including Big Data, is simply a tool to achieve this goal.

Amazon’s confidence in its Big Data capabilities is evident. Since its earliest days, Amazon has taken a data-driven approach to optimising the customer experience, including using data models that track the frequency and speed of customer clicks to predict purchase intentions. More recently, Amazon has also devised an extraordinary arrangement to deliver items it “anticipates” customers might want to their homes even before they purchase them.

Jack Ma, for his part, stated that Alibaba’s mission is to help the world conduct business, and he insisted on putting customers first, employees second and shareholders third. He stressed that while money was made through courage in the 1980s and through connections in the 1990s, in this era it must be made through knowledge.

Alibaba’s success is linked to the successful implementation of its data strategy, but without this unwavering sense of mission, all its technology and Big Data resources would be nothing more than loose sand.

Therefore, corporates should not lightly abandon the vision and mission they believe in, such as “digital first and people-oriented”, simply because the world is in turmoil. In times of unpredictable change, it is worth doing some soul-searching about what is worth persisting with and holding on to.

According to Lao Tzu, a large tree grows from a tiny sapling; a nine-storey platform rises from buckets of soil; a journey of a thousand miles begins beneath one’s feet. Lao Tzu was illustrating that things grow from small to large and from weak to strong. A problem is easier to solve when it first appears, and a bad thing is easier to dispel while it is still fragile and small. If we do a good job of prevention, we can eliminate trouble before it arises.

Trap two: Ignoring the value of data connectivity

The second challenge of digital transformation is that companies that have collected, dispatched, deployed and accessed data have mostly not considered the correlations across its many dimensions. For example, marketing data is mainly used by the marketing team, customer complaint data sits in the customer service department, and risk control data is held by the risk control department. Yet if the data were fully integrated across departments, multidimensional analysis could identify quality customers far more effectively. Unfortunately, the negative impact of unlinked data on the business is less obvious, so each department prefers to build its own system and is satisfied with being self-sufficient. Each department’s operations may succeed on their own terms, but they run contrary to the principles and direction of the digital economy and only drift further and further apart.
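The quality-customer example can be made concrete with a simple cross-department join. The Python sketch below assumes all three departments already share a customer ID, which is itself an outcome of good governance. The attribute names, thresholds and data are hypothetical. The point is that a filter using all three views selects differently from one that uses marketing data alone.

```python
def link_customer_views(marketing, complaints, risk):
    """Merge per-department views (customer_id -> attributes) into one profile.
    Assumes attribute names do not collide across departments."""
    customers = marketing.keys() | complaints.keys() | risk.keys()
    return {
        cid: {**marketing.get(cid, {}), **complaints.get(cid, {}), **risk.get(cid, {})}
        for cid in customers
    }


def quality_customers(profiles, min_spend, max_complaints, max_risk):
    """Customers who spend enough, rarely complain and carry low risk.
    A missing risk score defaults to 1.0, i.e. unknown risk is treated as high."""
    return sorted(
        cid for cid, p in profiles.items()
        if p.get("spend", 0) >= min_spend
        and p.get("complaints", 0) <= max_complaints
        and p.get("risk_score", 1.0) <= max_risk
    )


marketing = {"c1": {"spend": 500}, "c2": {"spend": 800}, "c3": {"spend": 50}}
complaints = {"c2": {"complaints": 5}}
risk = {"c1": {"risk_score": 0.1}, "c2": {"risk_score": 0.2}, "c3": {"risk_score": 0.1}}
profiles = link_customer_views(marketing, complaints, risk)
print(quality_customers(profiles, min_spend=100, max_complaints=1, max_risk=0.5))
```

Looking at marketing spend alone would select both c1 and c2. The linked view excludes c2 because of its complaint history.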

Given this trend, data must be linked together to generate significant value.

Trap three: Overconfidence in internal data having contextual meaning

The final pitfall concerns corporates’ ability to use externally acquired data to make up for the shortcomings of their existing data, adding context to internal data to enhance its explanatory power. In Big Data, isolated data points are often useless on their own, and it is hard to achieve goals with individual data points. For example, when trying to understand the spread of an epidemic, simply looking at the number of new cases and deaths does not help predict the spread and impact of the virus. Adding data such as population movement (e.g. GPS location movement per case) as a complementary explanation lets us identify the path and speed of the virus’s spread more accurately. Adding context and extending the data in this way facilitates in-depth analysis and prediction.

Business leaders now need to revisit their digital transformation strategies to truly leverage and integrate their own and external data to outperform their competitors. One of the goals of digital transformation is to find the appropriate scope of quality data to make trustworthy business decisions.

If the three traps above are not addressed, the data governance framework of the data lifecycle cannot be established, data cannot be reused and evolved, and the digital transformation of enterprises will be reduced to “wood without roots” and “water without source”. Whatever has been done will be superficial and unable to create sustainable value.